Evospnet - Evolutionary Split Networks

Training Methodology

The Requirement:

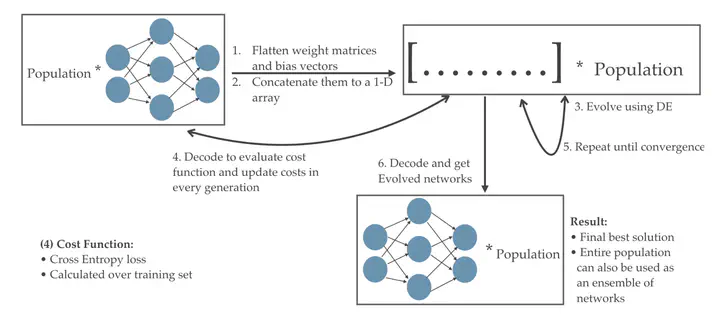

Gradient descent can get stuck in local minima. Evolutionary algorithms, by contrast, search with a whole population of candidate solutions and are much less likely to get trapped. Using these algorithms to train neural networks is called Neuroevolution. However, Neuroevolution suffers from very long training times, and its performance degrades as the dimensionality (number of trainable parameters) of the network grows. The algorithms used to evolve the weights must evaluate the loss function very frequently, so cutting training time means throwing more compute resources at the problem.
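To make that cost concrete, here is a minimal sketch of plain Neuroevolution with Differential Evolution in NumPy. It assumes a hypothetical toy task (fitting sin(x) with a 1-8-1 tanh network whose weights are stored as one flat vector) and a textbook DE/rand/1/bin loop; the names `mse_loss` and `differential_evolution` are illustrative, and this is not the project's code. Every trial vector costs one full loss evaluation, so the total is `pop_size * (generations + 1)`.

```python
import numpy as np

# Hypothetical toy task: fit y = sin(x) with a 1-8-1 tanh MLP.
X = np.linspace(-3, 3, 64).reshape(-1, 1)
Y = np.sin(X)
SHAPES = [(1, 8), (8,), (8, 1), (1,)]  # W1, b1, W2, b2
DIM = sum(int(np.prod(s)) for s in SHAPES)  # 8 + 8 + 8 + 1 = 25 parameters


def unflatten(theta):
    """Slice a flat parameter vector back into the layer tensors."""
    params, i = [], 0
    for s in SHAPES:
        n = int(np.prod(s))
        params.append(theta[i:i + n].reshape(s))
        i += n
    return params


def mse_loss(theta):
    """One full forward pass over the data: this is what every DE trial costs."""
    W1, b1, W2, b2 = unflatten(theta)
    hidden = np.tanh(X @ W1 + b1)
    return float(np.mean((hidden @ W2 + b2 - Y) ** 2))


def differential_evolution(loss, dim, pop_size=30, generations=200, F=0.5, CR=0.9, seed=0):
    """Classic DE/rand/1/bin. Returns best vector, its loss, and the number of loss calls."""
    rng = np.random.default_rng(seed)
    pop = rng.uniform(-1, 1, size=(pop_size, dim))
    fitness = np.array([loss(p) for p in pop])
    evals = pop_size
    for _ in range(generations):
        for i in range(pop_size):
            # Mutation: combine three distinct individuals other than i.
            others = [j for j in range(pop_size) if j != i]
            a, b, c = pop[rng.choice(others, 3, replace=False)]
            mutant = a + F * (b - c)
            # Binomial crossover, forcing at least one gene from the mutant.
            mask = rng.random(dim) < CR
            mask[rng.integers(dim)] = True
            trial = np.where(mask, mutant, pop[i])
            f_trial = loss(trial)
            evals += 1
            # Greedy selection: keep the trial only if it is no worse.
            if f_trial <= fitness[i]:
                pop[i], fitness[i] = trial, f_trial
    best = int(np.argmin(fitness))
    return pop[best], fitness[best], evals
```

Calling `differential_evolution(mse_loss, DIM)` with the defaults spends 30 + 30 × 200 = 6,030 loss evaluations on a network with only 25 parameters. A common DE heuristic scales the population with the number of parameters, so for a realistically sized network the evaluation count grows quickly, which is the scaling problem described above.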

How did I solve it?

- To tackle the dimensionality and resource issues, I propose splitting a neural network into parts that are evolved separately and recombined iteratively via a step-wise evolutionary strategy, with the aim of reaching a better solution than its initial population, which is generated using different initialization schemes.

- Empirically, Evospnet is shown to perform better than regular Neuroevolution via Differential Evolution.

- The paper is available here.

- The code is available here.
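The split-and-recombine idea can be sketched as a block-coordinate loop: the flat weight vector is cut into contiguous blocks, each block is evolved with Differential Evolution while the rest of the network stays frozen, and the evolved block is written back into the full network. This is a hypothetical illustration, not the algorithm from the paper: the contiguous block layout (`np.array_split`), DE as the inner optimizer, the elitist seeding of each sub-population, the toy sin(x) task, and all names (`de_minimize`, `split_evolve`) are assumptions.

```python
import numpy as np

# Hypothetical toy task: fit y = sin(x) with a 1-8-1 tanh MLP stored as a flat vector.
X = np.linspace(-3, 3, 64).reshape(-1, 1)
Y = np.sin(X)
HIDDEN = 8
DIM = 3 * HIDDEN + 1  # W1 (1x8) + b1 (8) + W2 (8x1) + b2 (1) = 25


def mse_loss(theta):
    W1 = theta[:HIDDEN].reshape(1, HIDDEN)
    b1 = theta[HIDDEN:2 * HIDDEN]
    W2 = theta[2 * HIDDEN:3 * HIDDEN].reshape(HIDDEN, 1)
    b2 = theta[3 * HIDDEN]
    pred = np.tanh(X @ W1 + b1) @ W2 + b2
    return float(np.mean((pred - Y) ** 2))


def de_minimize(loss, dim, rng, seed_vec=None, pop_size=20, generations=50, F=0.5, CR=0.9):
    """DE/rand/1/bin; if seed_vec is given it joins the initial population (elitism)."""
    pop = rng.uniform(-1, 1, size=(pop_size, dim))
    if seed_vec is not None:
        pop[0] = seed_vec
    fit = np.array([loss(p) for p in pop])
    for _ in range(generations):
        for i in range(pop_size):
            others = [j for j in range(pop_size) if j != i]
            a, b, c = pop[rng.choice(others, 3, replace=False)]
            mask = rng.random(dim) < CR
            mask[rng.integers(dim)] = True
            trial = np.where(mask, a + F * (b - c), pop[i])
            f = loss(trial)
            if f <= fit[i]:
                pop[i], fit[i] = trial, f
    best = int(np.argmin(fit))
    return pop[best].copy(), float(fit[best])


def split_evolve(loss, dim, n_splits=5, rounds=3, seed=0):
    """Hypothetical split-and-recombine loop: evolve one block at a time, others frozen."""
    rng = np.random.default_rng(seed)
    theta = rng.uniform(-1, 1, size=dim)
    history = [loss(theta)]
    blocks = np.array_split(np.arange(dim), n_splits)
    for _ in range(rounds):
        for idx in blocks:
            # Sub-problem: only the entries in idx vary; the rest of theta is fixed.
            def block_loss(z, idx=idx):
                full = theta.copy()
                full[idx] = z
                return loss(full)

            best_z, _ = de_minimize(block_loss, len(idx), rng, seed_vec=theta[idx])
            theta[idx] = best_z  # recombine the evolved block into the full network
        history.append(loss(theta))
    return theta, history
```

For example, `theta, history = split_evolve(mse_loss, DIM)` runs three rounds over five blocks and records the full-network loss after each round. Because the current values of each block are seeded into its sub-population and DE selection is greedy, the loss in `history` never increases from one round to the next, and each DE run searches only a 5-dimensional space instead of the full 25.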

Technologies:

- Programming Languages: Python

- ML Libraries: PyTorch, SciPy

Team: Abhimanyu Bellam